A multidisciplinary team of researchers has developed a deep-learning framework that assesses and improves the quality of endoscopy videos, to aid the detection of cancer and other gastrointestinal diseases.

Cancers detected at an earlier stage have a much higher chance of being treated successfully. The main method for diagnosing cancers of the gastrointestinal tract is endoscopy, in which a long, flexible tube with a camera at the end is inserted into the body (for example, into the oesophagus, stomach or colon) to look for changes in the organ lining.

Endoscopic methods such as radiofrequency ablation can also be used to prevent pre-cancerous regions from progressing to cancer if they are detected in time.

Unfortunately, during conventional endoscopy, pre-cancerous conditions and early-stage cancers, which are the easiest to treat, are also the hardest to spot and are often missed, especially by less experienced endoscopists.

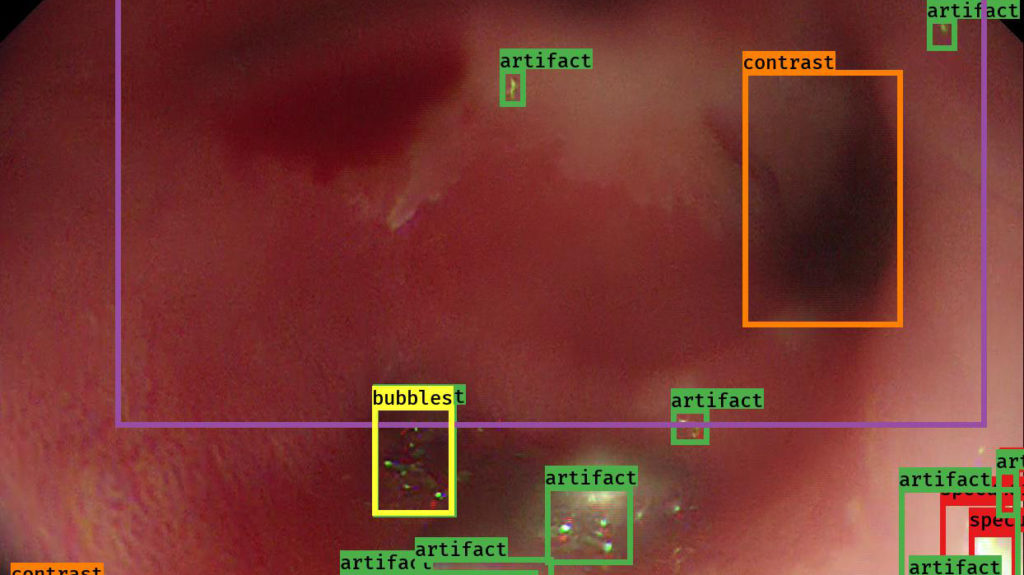

Cancer detection is made even more challenging by artefacts in the endoscopy video such as bubbles, debris, overexposure, light reflection and blurring, which can obscure key features and hinder efforts to automatically analyse endoscopy videos.

In an effort to improve the quality of video endoscopy, a team of researchers from the Institute for Biomedical Engineering (Sharib Ali and Jens Rittscher), the Translational Gastroenterology Unit (Barbara Braden, Adam Bailey and James East) and the Ludwig Institute for Cancer Research (Felix Zhou and Xin Lu) have developed a deep-learning framework for quality assessment of endoscopy videos in near real-time.

The framework, described in a paper published in the journal Medical Image Analysis, can reliably identify six different types of artefact in the video, generate a quality score for each frame and restore mildly corrupted frames.
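To make the idea of a per-frame quality score more concrete, here is a minimal Python sketch. It is not the scoring method from the Medical Image Analysis paper. It assumes that a detector has already returned artefact bounding boxes for a frame, and the `Detection` class, the `frame_quality_score` function, the artefact labels and the severity weights are all hypothetical. Each artefact lowers the score in proportion to the fraction of the frame it covers, scaled by a weight for its type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detected artefact: a class label and a pixel bounding box (x0, y0, x1, y1)."""
    label: str
    x0: float
    y0: float
    x1: float
    y1: float


# Hypothetical per-class severity weights, chosen for illustration only;
# they are NOT taken from the published framework.
DEFAULT_WEIGHTS = {
    "blur": 1.0,
    "overexposure": 0.8,
    "bubbles": 0.5,
    "debris": 0.5,
    "reflection": 0.3,
}


def frame_quality_score(detections, width, height, weights=None):
    """Return a score in [0, 1], where 1 means no weighted artefacts were found.

    Hypothetical scoring rule: subtract (class weight x fraction of frame area)
    for each detection. Labels missing from `weights` contribute nothing.
    """
    if weights is None:
        weights = DEFAULT_WEIGHTS
    frame_area = float(width * height)
    penalty = 0.0
    for d in detections:
        # Clip the box to the frame so boxes extending past the edges
        # are not over-counted.
        w = max(0.0, min(d.x1, width) - max(d.x0, 0.0))
        h = max(0.0, min(d.y1, height) - max(d.y0, 0.0))
        penalty += weights.get(d.label, 0.0) * (w * h) / frame_area
    # Floor at zero so heavily corrupted frames still score within [0, 1].
    return max(0.0, 1.0 - penalty)


if __name__ == "__main__":
    # A blurred region covering a quarter of a 100 by 100 frame.
    frame = [Detection("blur", 0, 0, 50, 50)]
    print(frame_quality_score(frame, width=100, height=100))
```

With the illustrative weights, the example frame scores 0.75. Because the penalties of overlapping boxes are simply added together, this sketch can over-penalise frames with overlapping artefacts; a more careful version might compute the union of artefact regions per class before weighting them.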

Restoring frames can help build visually coherent 2D or 3D maps for further analysis. Per-frame quality scores can also help trainees assess and improve their endoscopy screening performance.

Future work aims to use real-time, computer-aided analysis of endoscopic images and videos to identify potentially cancerous changes automatically during endoscopy, enabling earlier detection.

This work was supported by the NIHR Oxford Biomedical Research Centre, the Engineering and Physical Sciences Research Council, the Ludwig Institute for Cancer Research and Health Data Research UK.